Why I recommend Chrome to family...

Thoughts and observations on the ecosystem of security bugs

There’s been a lot of discussion lately around the ecosystem of security bugs - from the blog post by Oracle about reverse engineers and bug bounties to the unfortunate grsecurity announcement. Normally, I sit back and think “wow” as I close Twitter and get back to it, but I have wanted to collect and share some thoughts on this topic for some time.

The ecosystem of security bugs is very complex - history shows the importance of secure software architectures and mitigation controls. I believe sound architectures also lead to a better understanding and management of security threats.

I focus this post on Chrome, as it’s a complicated piece of software which I believe the Google security team have done an excellent job maturing over the years. I often recommend Chrome for browsing to friends and family who are concerned about malware and the like, and there’s actually quite a bit of rationale as to why.

This post first emphasises the importance of secure software architectures and hardened platforms and then looks at a few examples of important and strategic security research that’s helped strengthen the software’s security posture.

I then spend some time going over a few relevant considerations for those managing security threats, and caution against the pitfall described by the “Drain the swamp” analogy, which I believe is a real risk when preparing threat management security activities.

Reading time: between 10-20 mins

For well over a decade, the emphasis on defining logical boundaries in software, and then implementing containment and platform hardening approaches, has slowly migrated from critical server-side daemons (back when remote exploits were prevalent) to client-side software.

One of the more commonly known daemon implementations is privsep in OpenSSH by Niels Provos; however, there are numerous other examples from around this time. One of the pioneers of privilege separation was vsftpd by Chris Evans, whose vsftpd design notes have this great excerpt:

“Unfortunately, this is not an ideal world, and coders make plenty of mistakes. Even the careful coders make mistakes. Code auditing is important, and goes some way towards eliminating coding mistakes after the fact. However, we have no guarantee that an audit will catch all the flaws.”

As a teenager I remember first looking at Mark’s pre-auth OpenSSH challenge-response bug back in 2002 and how, if privilege separation was enabled, it would land an attacker in a chroot-jailed and unprivileged child process. This type of hurdle is an immediate mitigating factor that requires a pivot, and a glaring one at the time was to use Linux/BSD kernel flaws to break the chroot and continue. I actually presented on this precise topic at the first Ruxcon while also realising I had never done any public speaking before.
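To make the idea concrete, here is a minimal sketch of the privilege separation pattern in Python. It is not how OpenSSH or vsftpd implement it; the names `parse_untrusted` and `run_in_child`, and the optional `drop_uid` and `jail_dir` parameters, are hypothetical and only there for illustration. The risky parsing runs in a forked child that can be jailed and unprivileged, and the parent only accepts a small fixed-size reply over a pipe.

```python
import os
import struct


def parse_untrusted(data: bytes) -> int:
    """Stand-in for a complex, bug-prone parser: counts comma-separated fields."""
    return len(data.split(b","))


def run_in_child(data: bytes, drop_uid=None, jail_dir=None) -> int:
    """Parse untrusted data in a separate child process (POSIX only).

    drop_uid and jail_dir are hypothetical knobs: both need root, so they
    default to None and the sketch then only demonstrates the process split.
    """
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: optionally shed privileges, do the risky work, report back.
        status = 1
        try:
            os.close(read_fd)
            if jail_dir is not None:
                os.chroot(jail_dir)   # confine the filesystem view
                os.chdir("/")
            if drop_uid is not None:
                os.setgroups([])      # drop supplementary groups first
                os.setgid(drop_uid)
                os.setuid(drop_uid)   # irreversible drop to an unprivileged uid
            result = parse_untrusted(data)
            os.write(write_fd, struct.pack("!I", result))
            status = 0
        finally:
            os._exit(status)          # never return into the parent's code path

    # Parent: stays privileged but only ever sees a 4-byte reply.
    os.close(write_fd)
    with os.fdopen(read_fd, "rb") as pipe:
        reply = pipe.read()
    _, wait_status = os.waitpid(pid, 0)
    if wait_status != 0 or len(reply) != 4:
        raise RuntimeError("child failed or sent a malformed reply")
    return struct.unpack("!I", reply)[0]


if __name__ == "__main__":
    print(run_in_child(b"user,host,port"))
```

The point of the design is that a memory corruption or logic bug in the parser lands the attacker in the child, so they still need a second bug (for example in the kernel) to get any further.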

From my perspective strengthening kernels has been a critical pursuit for many years, and I’ve personally seen grsecurity/PaX as a key project to help set a standard for major operating systems to harden userland, tighten low-level OS controls and help raise the cost of attacking bug classes or boundaries. As these various controls raise costs and some bugs have their value considerably lowered, many attackers consequently move to another vector where an attack is cheaper to identify and pursue.

Over the past several years, as client-side software and mobile platforms have adopted similar containment approaches, the same types of principles often apply. Watching pwn2own unfold over the years reaffirms this to me - targets that don’t adopt a security-conscious architecture in their foundation fall quickly and cheaply, as bugs can have an immediate critical impact.

The blog series (1, 2) by Azimuth on the Chrome architecture illustrates the main attack paths well. When Vupen demonstrated exploiting Flash and first took out Chrome at pwn2own (even if it was preempted) via the non-sandboxed plugin, it again demonstrated the importance of strong software architectures and that attackers typically opt to take the cheapest path.
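One way to reason about “the cheapest path” is to treat the target as a graph where each edge is a step an attacker must pay for. The sketch below uses a standard shortest-path search; the node names and every cost are made-up numbers purely for illustration and are not taken from any real assessment of Chrome.

```python
import heapq


def cheapest_path(graph, start, goal):
    """Dijkstra's algorithm over a dict-of-dicts graph of attack step costs."""
    queue = [(0, start, [start])]
    seen = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, step_cost in graph.get(node, {}).items():
            if nxt not in seen:
                heapq.heappush(queue, (cost + step_cost, nxt, path + [nxt]))
    return None


# Hypothetical costs: a sandbox escape is expensive, an unsandboxed plugin is not.
before = {
    "web page": {"sandboxed renderer": 5, "unsandboxed plugin": 6},
    "sandboxed renderer": {"full system": 20},
    "unsandboxed plugin": {"full system": 1},
}

# Same graph after the plugin is sandboxed too (again, an assumed cost).
after = {
    "web page": {"sandboxed renderer": 5, "unsandboxed plugin": 6},
    "sandboxed renderer": {"full system": 20},
    "unsandboxed plugin": {"full system": 20},
}

print(cheapest_path(before, "web page", "full system"))
# (7, ['web page', 'unsandboxed plugin', 'full system'])
print(cheapest_path(after, "web page", "full system"))
# (25, ['web page', 'sandboxed renderer', 'full system'])
```

With these assumed numbers, hardening the single weakest edge more than triples the cost of the cheapest route, which is the effect a sound architecture is aiming for.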

In the web world where the bar for identifying and exploiting vulnerabilities would be generally considered much lower and the impact can often be crippling, the subject of secure architectures in mid-tier web stacks and web frameworks is interesting and deserves its own case study.

Hardened systems and secure architectural decisions in software reflect a mature approach to building secure systems, and often appear to be coupled with developing an understanding of both the immediate attack surface and attack chains.

I find Chrome an excellent case-study for this topic and enough time has passed to look at a few things in retrospect mostly focusing on system integrity.

Google have their internal Chrome security team and undoubtedly a lot of work happens behind the scenes. In this post I’d like to highlight a few interesting pieces of public technical research which I think has been important to help evolve their security posture in a strategic way to where it is now.

As far as public community involvement goes, there have been the bug bounties for Chrome. Going over the payouts along with their chrome awards demonstrates a clear and mature distinction between various bug classes (code execution, UXSS, infoleaks, etc.) and then also by severity and submission quality.

It would be easy for someone to see vulnerability disclosures and software updates each week and get lost on how to interpret it all - however, slapping a risk rating on any vulnerability is not so black and white. A few years ago I spent some time researching vulnerability discussion and hype on Twitter to try to add color and discussion-driven timelines to CVEs for this reason.

There are a few specific research points about security bugs related to more abstract vectors, library attack surface and also target specific attacks I’d like to go over.

The IPP code in cupsd was for some time an interesting potential vector to try to attack Chrome on Linux, but the whole cross-origin thing was a fairly effective procrastinative excuse for me. One morning I woke up to read the amazing guest P0 post by Neel, who pulled off that attack in style.

This work was a great example of understanding abstract vectors - via an XSS in the CUPS templating engine a malicious webpage could bypass the browser security constraint and open a new vector to exhaust the huge C-based IPP parsing code-base that’d sat quietly and peacefully thanks to the old binding-to-localhost obscurity technique.

This attack is platform specific and also not an immediate attack surface but takes a level of understanding of the full stack to be able to design and then the skill to uncover exploitable bugs in this attack path. At the end of Neel’s post is a perfect example of follow-up hardening specific to this vector.

This raises a point about bugs affecting non-default configurations, which may seem like the least sexy type of vulnerability; however, this lack of appeal can also make them an easier attack path in many code bases. In an era where there are actors who need to consider the economics of burning exploits, bugs that affect non-default configurations would hold value for target-specific usage.

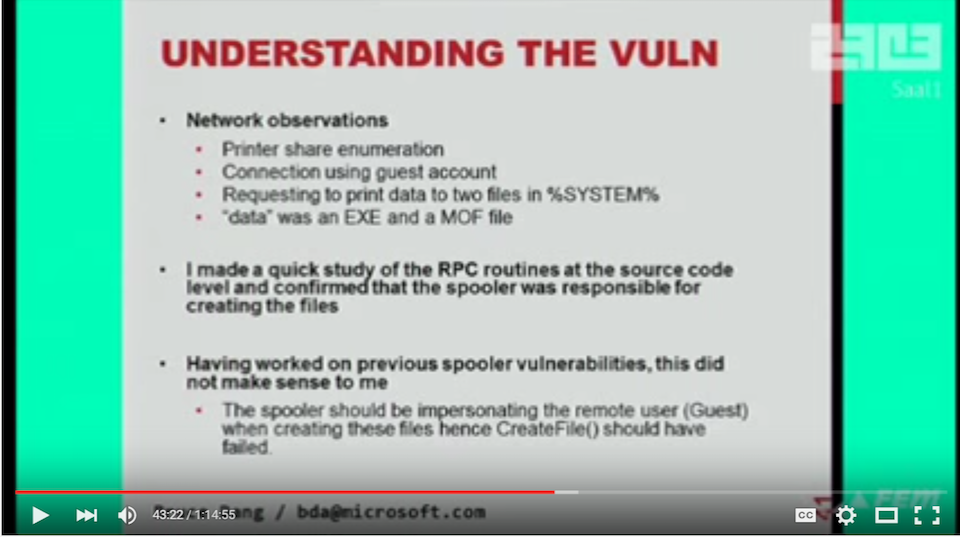

One example where I believe this may be applicable is the printer-spooler bug that was used in Stuxnet, described in the awesome CCC talk by Bruce Dang.

Sometimes non-default options can have quite extensive attack surface or even systematic issues that were addressed in main components but have been neglected elsewhere.

There is also the situation of libraries and external dependencies - many libraries are there for heavy lifting and often come with a significant attack surface. WebKit is one example, and it proved fruitful for the countless people who got in early running a web-rendering fuzzer. Another example is jduck’s libstagefright Blackhat talk, which covers a ripe attack surface that spans many projects.

However for targets such as Chrome (or Android) on modern up-to-date platforms, the first hurdle for exploitation is usually userland mitigating controls and then if there is sandboxing of the library component, it will likely require a pivot to affect the full integrity of the system.

Back in 2010 Tavis and Julien gave their There’s a party at ring0… presentation, mentioning the importance (or even dependency) of kernel security for user-land sandboxing. The topic of attacking the kernel attack surface (e.g. GDI) via Chrome to bypass the sandbox and user-land mitigating controls highlights, to me, how much there is to consider in terms of both attack surface across privilege boundaries and exploit economics.

This is also one reason why j00ru’s One font vulnerability to rule them all research was quite epic, because the initial vector in was a bug in Adobe Reader and then the sandbox escape came by exploiting the exact same bug in the shared codebase in the Windows GDI.

One of the most valuable aspects of running events like pwn2own in my opinion is getting in-depth attack trees that demonstrate intricate vectors that run through components of complicated software.

The two-part blog series A Tale of Two Pwnies (1, 2) shows off two individuals who’ve dedicated time and technical skill to understand software beyond a shallow layer and then craft attacks. I interpret this as displaying their calibre by following somewhat flamboyant attack paths that are beyond the norm, or maybe beyond what’s necessary - and while functional exploits of these are worth a lot, for the participants the reward plus recognition pays off, to me, if you look beyond a dollar figure.

Receiving such specific technical details and sitting down with an attacker to discuss their approach and execution is incredibly valuable for gaining insights into an attacker’s intuition, which can extend a threat model beyond what’s been preempted. When reaching this level of maturity there is an idealistic state where both vulnerabilities and exploits become target-specific (and not generic bug classes or exploit techniques), which results in the bar rising substantially and consequently the threat actors shifting.

When attacks require more niche, intricate research to craft (e.g. Mark’s 2008 Application-Specific Attacks: Leveraging the ActionScript VM) and make reliable, the economics of their use change considerably too, and the sooner we get to this point for critical infrastructure, software and platforms the better.

I’ve seen many presentations by Haroon over the years, however I think one of my favourites was Penetration Testing considered harmful back in 2011, in which he uses the “Drain the swamp” analogy in comparison to penetration testing - i.e. losing sight of your initial objective. I personally see this analogy as being perfect for many activities related to defending applications or organisations.

“when you’re up to your neck in alligators, it’s easy to forget that the initial objective was to drain the swamp”

While all activities can serve a valuable purpose it’s important to be a realist and carefully consider your current level of maturity and objective before jumping in.

I believe Chrome have responded to their complicated threat landscape quite well via strong foundations and research-driven offense and defense activities working in unison. However, choosing which security activities to run and prioritising them really requires careful thought on a case-by-case basis.

In this section I look at a few specific subjects and share some perspective related to managing security bugs, bearing in mind the “Drain the swamp” analogy.

Penetration testing can come in many approaches and is a fairly fundamental activity for identifying weaknesses and guiding improvements. I then see bug bounties as a fairly progressive activity that can show maturity and promote an open channel to security researchers, which Google (and many others) have run for some years.

Having third parties perform penetration tests and running public bug bounties each come with pros and cons. Both require careful evaluation before commencing, and need adequate preparation and readiness to be responsive for them to have a real ROI.

Haroon’s concern about penetration testers introducing risk brings up an interesting point: for public bug bounties of applications and infrastructure, if you put a dollar value on bugs then suddenly bug bounty hunters can become a new primary threat actor.

One key point relates to the assurances around their data safe-guarding methods and maturity should they get access to systems or internal data. Suddenly, scenarios where IP is sent in plain text via connect-back shells or passed around via social media become plausible and high-risk.

All security activities will have varying levels of ROI, and understanding the threat landscape and current maturity helps to prioritise a budget. Web-based attacks generally have a lower bar for entry and there are a lot of resources to help people get started and grow skills quickly, and yet sometimes we see web bounties paying high amounts for common bugs that are relatively cheap to identify and exploit. Perhaps in such instances, when the ROI for bugs is so askew while also introducing risk to the organisation, focusing on other activities first may have been wiser.

Another point of Haroon’s presentation is how a few penetration testers could each successfully compromise a target but all take different paths. The issue I see is that testers and bug hunters often seem to be objective-focused rather than assurance-focused, with the general objective being to successfully exploit a bug in something important and get paid. Being assurance-focused, however, would mean focusing on breadth and then prioritising depth and coverage for each layer based on experience of what’s most likely for that target, which is completely different.

From both my own experience and observing publicised vulnerabilities over some years, systemic patterns are repeated throughout branches of a code base, which is why it’s important to leverage both whitebox (i.e. code review) and blackbox security assessments. When it’s merely blackbox testing and fuzzing, some days watching bug hunting is reminiscent of the game Hungry Hungry Hippos and reminds me of this recent tweet by Stefan:

With many bugs all eyes are shallow.

- Stefan Esser (@i0n1c), August 14, 2015

Fabs’ paper on Vulnerability Extrapolation a few years ago was to me a refreshing piece of work that tries to dig layers beneath the surface, which can have a wider tangible effect than squashing an individual bug. I mentioned the OpenSSH challenge-response bug earlier - as an example, this vulnerability had an updated advisory because that same int overflow construct had to be patched in the PAM module too.
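For readers unfamiliar with this kind of construct, the arithmetic behind a typical allocation-size integer overflow can be worked through in a few lines. This is a simplified illustration of the general bug class in Python, emulating 32-bit unsigned C arithmetic; it is not the OpenSSH code, and `POINTER_SIZE`, `wrapped_alloc_size` and `checked_alloc_size` are names I’ve made up for the example.

```python
UINT32_MAX = 0xFFFFFFFF
POINTER_SIZE = 4  # assumed size of one array element on a 32-bit platform


def wrapped_alloc_size(count: int) -> int:
    """Emulate `count * sizeof(elem)` computed in 32-bit unsigned arithmetic."""
    return (count * POINTER_SIZE) & UINT32_MAX


def checked_alloc_size(count: int) -> int:
    """The same computation with the bounds check that the construct needs."""
    if count > UINT32_MAX // POINTER_SIZE:
        raise OverflowError("element count would overflow the allocation size")
    return count * POINTER_SIZE


# An attacker-supplied count that makes the multiplication wrap around.
evil_count = 0x40000001

print(wrapped_alloc_size(evil_count))  # 4
```

The buffer ends up 4 bytes long while the following loop still believes it has over a billion slots to fill. Because the flaw is in the construct rather than in one call site, anywhere the same pattern was copied needs the same check, which is exactly why a pattern-level review pays off.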

While blackbox testing may successfully find bugs, a whitebox review may be able to efficiently find a few systemic patterns where these flaws originate, which can then be addressed in a more broad-sweeping way than isolated patching.

Charlie’s Babysitting an Army of Monkeys presentation made me walk out of that Cansecwest room not feeling so warm and fuzzy at the volume of bugs from 5 lines of Python, but some of the fuzzing statistics in this talk on uniques and manifestations of single vulnerabilities were actually quite interesting.
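The distinction between raw crashes and uniques is easier to see with a small sketch. A common approach is to bucket crashes by the top few frames of their stack trace; this is a generic triage technique and not necessarily what was used in the talk, and the crash IDs and function names below are invented sample data.

```python
from collections import defaultdict


def bucket_crashes(crashes, depth=3):
    """Group crash IDs by the top `depth` stack frames as a rough uniqueness key."""
    buckets = defaultdict(list)
    for crash_id, frames in crashes:
        buckets[tuple(frames[:depth])].append(crash_id)
    return dict(buckets)


# Hypothetical fuzzer output: (crash id, stack frames from innermost to outermost).
crashes = [
    ("crash-001", ["memcpy", "parse_chunk", "read_file", "main"]),
    ("crash-002", ["memcpy", "parse_chunk", "read_file", "fuzz_entry"]),
    ("crash-003", ["free", "close_stream", "read_file", "main"]),
]

buckets = bucket_crashes(crashes)
print(len(crashes), "crashes,", len(buckets), "buckets")
```

Even then a bucket is only a heuristic: one underlying vulnerability can manifest under several different stacks, and two distinct bugs can share the same top frames.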

Google would certainly have a lot of experience dealing with a mixed bag of vulnerability submissions, both in terms of types of bugs but also quality. I think accepting submissions and triaging can be something quite hard to do in practice for many and like everything in security it needs to keep evolving and adapting.

All bugs, whether internally discovered or via a submission (or coincidentally, both), require some form of verification and triaging so they can be responded to - for advisories with a good amount of detail and an accompanying PoC this often works well.

However I can’t help but feel a small bit of empathy reading the Oracle blog post and what must be an oversaturation of poor quality bug submissions out there in general that affects the opportunity and approach of genuine and skilled bug hunters.

lcamtuf recently wrote on his blog about Understanding the process of finding serious vulns and shares that he is starting an interesting survey, reaching out to the discoverers of a sample of CVEs about their methodology and communication channels. These are important questions and it will be interesting to follow the results - one question I quite like relates to the motivation of the researcher.

In my opinion it’s important to factor in motivation and even experience when managing submissions. An experienced assurance-focused bug hunter may traditionally need to spend days or more preparing a PoC for an individual bug to be treated seriously, when maybe they could instead argue from experience, at a code level, that a flaw is potentially exploitable.

If it’s known their motivation is on assurance then it may be easier to keep them focused on gathering a more intimate understanding of the target which is needed to come up with results beyond typical vulnerabilities with accompanying exploits.

There’s a related point that for some targets the scope should go beyond “bugs” and also encompass attack surface reduction and defense-in-depth approaches for potential risks that are very costly to pursue or even theoretical in nature. Sometimes such issues can exist for some time, and via a small change in circumstance (a code change or a new technique surfacing) a potential technical risk can become a vulnerability that’s practical to exploit.

There’s finally the topic of OS security and exploit mitigations, which I believe Google understand well and have worked on hardening their dependencies on platform security. Ultimately one of the key security benefits of an up to date platform is to help raise the cost for attacks and is a dependency for effective application sandboxing - I’ll share a few angles why I think it’s important.

Matt Miller’s Ruxcon Breakpoint presentation in 2012 was great for its logical breakdown drawn from his experience at Microsoft working on exploit mitigations. Mitigation-level bypasses may be considered much more valuable than critical vulnerabilities, and it’s easy to forget that kernels and mitigations are pieces of software that have bugs and that mature regularly on major platforms with fixes and new techniques.

The topic of OS security, sandboxing and exploit mitigations has been fascinating to watch evolve over the years. In terms of projects a critical project for years has been grsecurity and another interesting project to note is Google’s seccomp that’s worth reading about.

A piece of kernel exploit mitigation research from last year I liked relates to the iOS work by Tarjei on the iOS Early Random PRNG focusing on weaknesses in iOS 6 kernel mitigations, which was a follow-up piece of work after his presentation with Mark on iOS 6 security at HITB.

While the aforementioned research is a single piece of work on a certain mobile platform, it demonstrates to me the intricacies of quite a complicated arms race and why platform exploit mitigations have been and remain so important.

I believe the ecosystem for security bugs in Chrome is tightly managed and controlled because they understand the economics of attacks affecting system integrity. This is why I recommend Chrome (either on a Chromebook, grsec hardened Linux, or an up to date Windows) to family and friends when they bring up a recent tale about “viruses”.

The short answer to “why?” is usually “because Java” out of laziness, but there’s obviously been a lot of strategic, well-thought-out research and activities beyond the obvious things such as automatic updates, safebrowsing, smart UX, bug bounties, etc.

At the core of it, it’s about raising the cost of attacks after building your threat model then continuously maturing it over time. For something as complicated as a browser, this requires a lot of consideration and skilled execution over years and I’m thankful it’s been in good hands.

Having said that, it’s also easy to become overly myopic on intricate technical problems and not dedicate resources to things outside of focus. There are endless examples of the need to regularly take a step back and consider susceptibility to various other vectors affecting key assets, a couple of examples being the recent Mozilla Bugzilla hack and the Reddit thread on Chrome’s password security strategy.

I previously mentioned that the Flash exploit path used by Vupen was preempted at a pwn2own - while clearly it was successfully exploited and correctly proved a point at the time, this path was also known to be weak (or even seen as the weakest/most likely) and I’m sure hardening was on the roadmap even if it wasn’t ready.

To me that’s a perfect example of understanding your threat model and being a realist - some things do take time to improve, but with each small iteration of hardening it’s important to have a tangible effect and in the meantime to consider defensive countermeasures. Not everyone has Google-like resources, but with this mindset and attitude it’s possible to make incremental improvements efficiently by first understanding the problem you’re up against.

Finally it really is a shame to see the announcement about grsecurity. I personally see most technology users having benefited from it in one way or another over the years. If it makes a comeback, consider showing support if you haven’t already done so.

Salut,
